Hello and welcome back! In our last post, we successfully built the heart of our homelab: a resilient ZFS NAS that serves files across our network. Our data now has a safe place to live, but a vault is only as good as its security and contingency plans. Now, we’re going to make that data invincible.

This post is all about automation and protection. We will build a multi-layered, automated defense system to guard against everything from accidental file deletion to a full-blown disaster. We’ll be following the classic 3-2-1 backup rule: at least 3 copies of our data, on 2 different media types, with 1 copy located offsite.

Before we build our defenses, let’s quickly recap the structure of our data vault, which we organized into several ZFS datasets, each with a specific purpose:

| ZFS Dataset | Content | Sharing Method |
|---|---|---|
| /ssd/archive | Nextcloud, Immich, & Jellyfin data | NFS |
| /ssd/backup | Proxmox VZDump Backups | N/A |
| /ssd/cloud | Small sensitive data for cloud sync | rclone |
| /ssd/docker | Docker-compose & config backups | rsync |
| /ssd/share | Temporary shared files | Samba (SMB) |
| /ssd/sync | Synced notes, password DB, etc. | Syncthing |

ZFS Dataset Organization

Let’s dive in and automate our defenses.

The Watchful Guardian: Automated Monitoring and Notifications

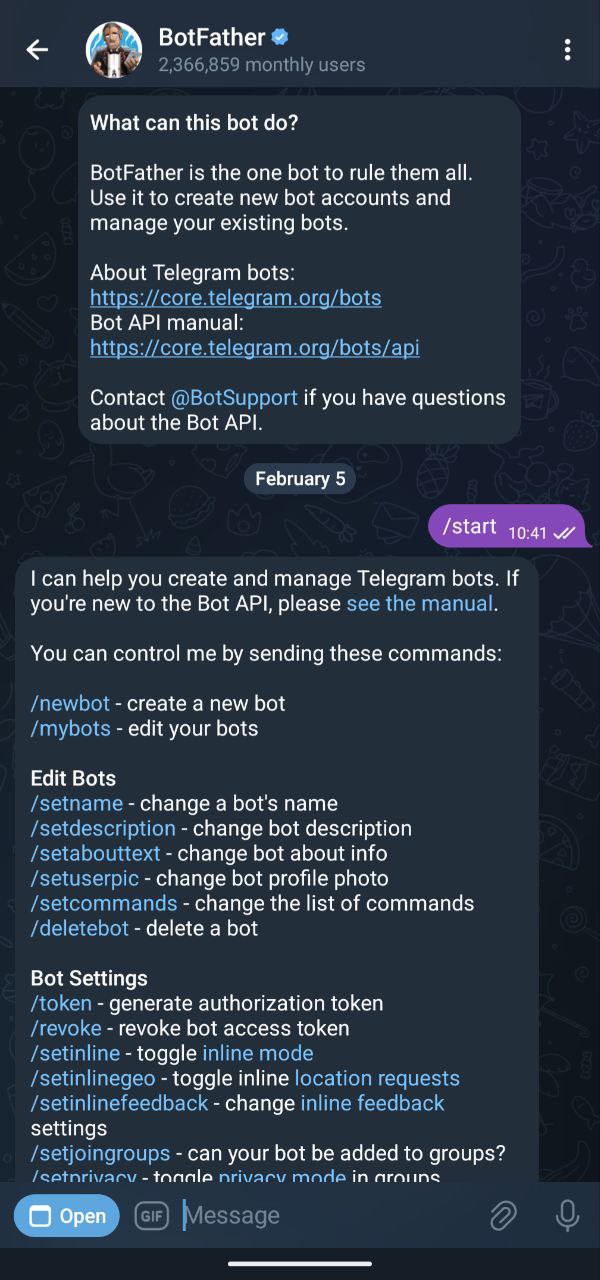

Before we build our alarms, we need a way to hear them. An automated process is useless if you don’t know whether it succeeded or failed. For this, I set up a Telegram bot to send instant notifications to my phone.

Setting up the bot is simple with Telegram's BotFather. Here is the procedure:

- Open Telegram and search for BotFather.

- Start a chat with BotFather and send the following command:

```
/start
```

- Create a new bot by sending:

```
/newbot
```

- Follow the prompts to:
  - Choose a name (e.g., `Home`).
  - Choose a unique username (e.g., `home_bot`).

- BotFather will reply with a link to your new bot and a bot token.

- Open the link from your phone to start a chat with your bot.

- Press Start and send any message to initialize the conversation.

- From your PC, retrieve your chat ID by making a `getUpdates` API request with your token:

```shell
curl -s "https://api.telegram.org/bot<token>/getUpdates"
```

- In the response, locate the `chat.id` field (inside `result[].message.chat`). This is your `chat_id`.

- Test sending a message using the `sendMessage` API:

```shell
curl "https://api.telegram.org/bot<token>/sendMessage?chat_id=<chat_id>&text=Hello"
```

The Telegram bot is now set up and ready to be used in scripts for sending notifications.
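To reuse the bot from scripts, the `sendMessage` call can be wrapped in a small shell function. The sketch below is a minimal example under stated assumptions, not the exact code from my scripts: `tg_send`, `TELEGRAM_TOKEN` and `TELEGRAM_CHAT_ID` are hypothetical names. It sends the text with `--data-urlencode`, so messages containing spaces, newlines or `&` arrive intact, which the plain query-string URL above does not guarantee.

```shell
#!/usr/bin/env bash
# Hypothetical helper for sending Telegram notifications from scripts.
# Assumes TELEGRAM_TOKEN and TELEGRAM_CHAT_ID are exported beforehand
# (e.g. from a root-only env file); both names are placeholders.

# tg_send MESSAGE
#   POSTs MESSAGE to the chat through the Bot API sendMessage method.
#   --data-urlencode encodes each field, so spaces, newlines and '&' are safe.
#   Returns curl's exit status (non-zero on network or HTTP errors thanks to -f).
tg_send() {
  local text="$1"
  curl -fsS --max-time 10 -o /dev/null \
    "https://api.telegram.org/bot${TELEGRAM_TOKEN:?TELEGRAM_TOKEN is not set}/sendMessage" \
    --data-urlencode "chat_id=${TELEGRAM_CHAT_ID:?TELEGRAM_CHAT_ID is not set}" \
    --data-urlencode "text=${text}"
}
```

A script can source this file and call, for example, `tg_send "Snapshots created on ${HOSTNAME}"`. With `-f`, curl returns a non-zero status on HTTP errors, so the caller can tell whether the notification actually went out.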

Defense Layer 1: Data Integrity and Local Snapshots

Our first line of defense protects the data living on the NAS itself. This involves two automated scripts that run on the Proxmox host.

- Daily Health Check:

This script runs every morning to check the status of the ZFS pool. If it ever detects a “DEGRADED” state (e.g., due to a drive failure), it immediately sends a high-priority alert through my Telegram bot, letting me know that a disk needs attention and may need to be replaced.

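A minimal sketch of such a check follows, assuming pool states are read with `zpool list -H -o name,health` (`-H` prints tab-separated fields without a header). `unhealthy_pools` and `check_pools` are hypothetical names, and any state other than `ONLINE` (for example `DEGRADED`, `FAULTED` or `UNAVAIL`) is treated as a problem.

```shell
#!/usr/bin/env bash
# Sketch of a daily ZFS health check; function names are hypothetical.

# unhealthy_pools
#   stdin:  "NAME<TAB>HEALTH" lines, as printed by `zpool list -H -o name,health`
#   stdout: one alert line for every pool whose health is not ONLINE
unhealthy_pools() {
  local name health
  while IFS=$'\t' read -r name health; do
    [[ -n "$name" && "$health" != "ONLINE" ]] || continue
    printf 'ZFS pool %s is %s\n' "$name" "$health"
  done
}

# check_pools
#   Queries the live pools. Prints the alerts and returns 1 if any pool needs
#   attention (or if zpool itself fails); returns 0 silently when all are ONLINE.
check_pools() {
  local report
  # pipefail inside the substitution so a failing zpool is not read as "healthy"
  if ! report=$(set -o pipefail; zpool list -H -o name,health | unhealthy_pools); then
    echo "zpool list failed"
    return 1
  fi
  if [[ -n "$report" ]]; then
    printf '%s\n' "$report"
    return 1
  fi
}
```

A cron wrapper can run `check_pools` and, when it returns non-zero, forward its output with the `sendMessage` call from the Telegram section. `zpool status -x`, which prints `all pools are healthy` when nothing is wrong, is an equally common basis for this kind of check.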

- Automated ZFS Snapshots:

ZFS snapshots are instant, read-only, point-in-time views of a dataset. Because clients cannot modify or delete them through a file share, they are an excellent defense against ransomware and accidental file deletion. I wrote a comprehensive bash script that runs every night via cron to manage them.

Key Features of the Snapshot Script:

- Creates Snapshots: It automatically creates daily, weekly, monthly, and yearly ZFS snapshots of my critical datasets (`archive`, `docker`, `share`, etc.).

- Enforces Retention: It automatically purges old snapshots based on a defined policy (e.g., keep 7 daily, 4 weekly) to save space, while never deleting the last snapshot that was sent offsite, because the next incremental offsite transfer needs it as its starting point.

- Reports Status: After every run, it sends a summary through my Telegram bot, confirming that the snapshots were created successfully or alerting me if an error occurred.

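The core of such a script can be sketched as below. This is an illustration, not my actual script: it assumes snapshot names of the form `<dataset>@auto-<label>-<UTC timestamp>`, the function names are hypothetical, and `PROTECTED` stands in for the last snapshot sent offsite, however you choose to track it.

```shell
#!/usr/bin/env bash
# Sketch of snapshot creation and retention; function names are hypothetical.
# Snapshot names follow <dataset>@auto-<label>-<UTC timestamp>.

# take_snapshot DATASET LABEL   (LABEL: daily, weekly, monthly or yearly)
take_snapshot() {
  zfs snapshot "$1@auto-$2-$(date -u +%Y-%m-%d_%H%M)"
}

# snapshots_to_prune KEEP [PROTECTED]
#   stdin:  snapshot names of ONE dataset and ONE label, oldest first
#   stdout: every name older than the newest KEEP, except PROTECTED
#           (e.g. the last snapshot replicated offsite)
snapshots_to_prune() {
  local keep="$1" protected="${2:-}" i
  local -a snaps
  mapfile -t snaps
  local cutoff=$(( ${#snaps[@]} - keep ))
  for (( i = 0; i < cutoff; i++ )); do
    [[ "${snaps[i]}" != "$protected" ]] || continue
    printf '%s\n' "${snaps[i]}"
  done
}

# prune DATASET LABEL KEEP [PROTECTED]: destroy the expired snapshots of one label.
prune() {
  local ds="$1" label="$2" keep="$3" protected="${4:-}" snap
  # -d 1 restricts the listing to DATASET itself; -s creation sorts oldest first
  zfs list -H -t snapshot -o name -s creation -d 1 "$ds" \
    | { grep -F "@auto-${label}-" || true; } \
    | snapshots_to_prune "$keep" "$protected" \
    | while read -r snap; do
        zfs destroy "$snap"
      done
}
```

A nightly run would then call something like `take_snapshot ssd/docker daily` followed by `prune ssd/docker daily 7 "$last_sent"` for each dataset, with the weekly, monthly and yearly labels handled the same way on their own schedules. Keeping the retention decision in a function that only reads names makes it easy to test without touching a real snapshot.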

Defense Layer 2: System-Level Backups

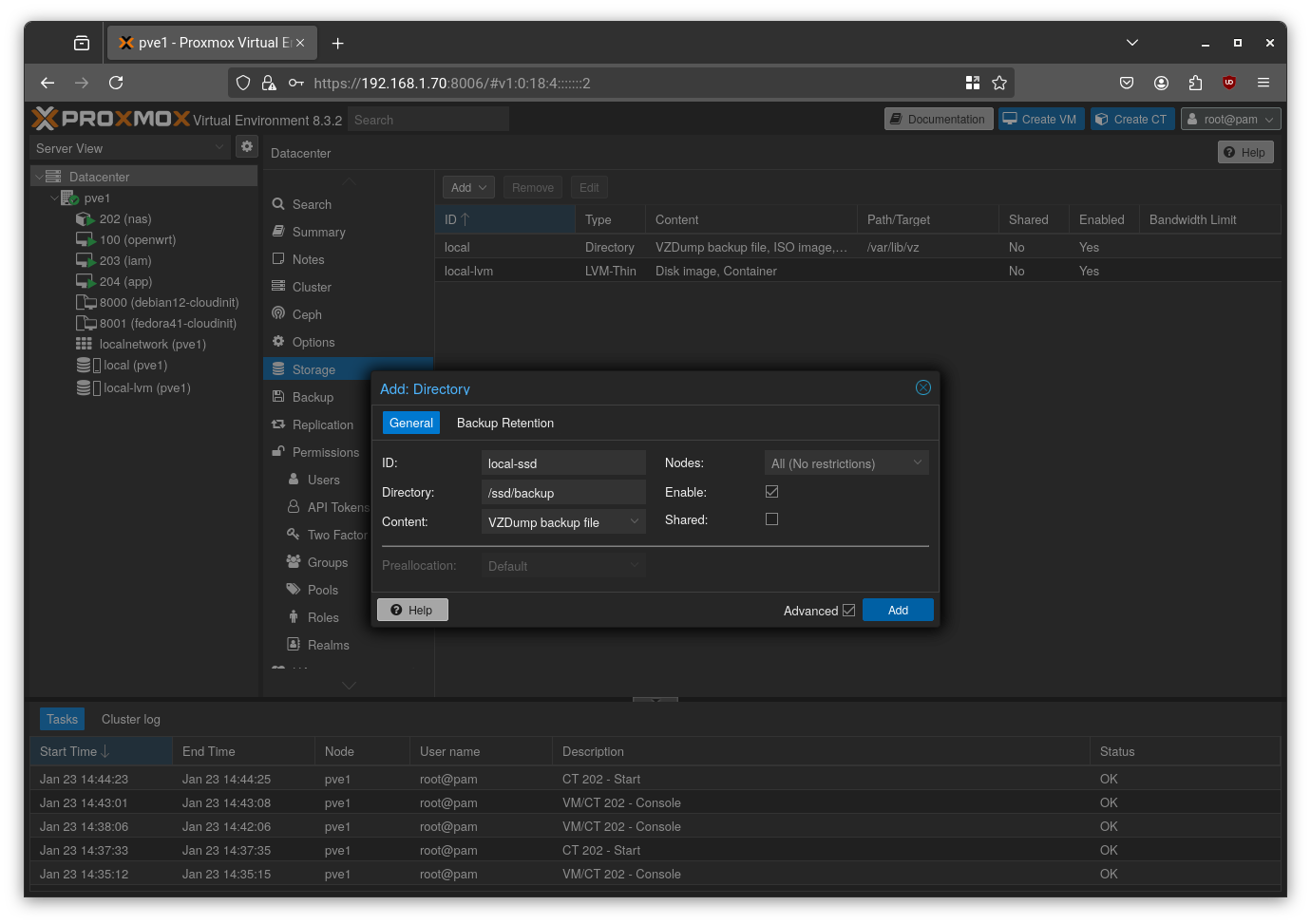

While snapshots protect the data within the datasets, we also need to protect the machines themselves. For this, I use the built-in Proxmox Backup feature.

I configured a backup job in the Proxmox web UI that runs every night. It creates a compressed backup of all my essential VMs and LXC containers (including the NAS container itself) and stores them on the ssd/backup ZFS dataset. If a VM ever becomes corrupted, I can restore it to a known good state in minutes.

The process here is straightforward: in the Proxmox web UI, go to Datacenter > Storage, click the Add button, select Directory, and point it at the dataset's mount path with "VZDump backup file" as its content type. I configured it as shown in the figure below.
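The same storage can also be registered from the Proxmox host's shell with `pvesm`. In the sketch below, `backup-ssd` is a hypothetical storage ID, and `--content backup` limits the directory to VZDump archives. The `vzdump` line runs a one-off backup of every guest to that storage, a quick way to test the setup before relying on the nightly job scheduled under Datacenter > Backup.

```shell
# Register /ssd/backup as Directory storage that only holds VZDump backups
# ("backup-ssd" is a placeholder storage ID).
pvesm add dir backup-ssd --path /ssd/backup --content backup

# One-off test run: back up every VM and container to that storage using
# snapshot mode and zstd compression.
vzdump --all 1 --storage backup-ssd --mode snapshot --compress zstd
```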

To further improve backup management, I decided to create a dedicated ZFS dataset to store a copy of the home folder from each machine. These home folders will include Docker Compose files for the installed services, along with their configuration files. To automate this process, I chose to use rsync.

Each new machine will run a cron job that backs up its entire home folder (including Docker Compose files and configuration data) to the NAS, under the path `/ssd/docker/<machine_name>`.

- Install `rsync`

Install rsync on both the NAS and the machine whose data you want to sync:

```shell
sudo apt update
sudo apt install -y rsync
```

- Generate SSH Key on the Source Machine

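On a machine running Docker (e.g., iam, app, etc.), generate an SSH key for root, since the backup will later run from root's crontab:

```shell
# Generate an SSH key (no passphrase)
sudo ssh-keygen -t ed25519 -N "" -f /root/.ssh/id_ed25519
```

This will create a key pair in `/root/.ssh/`.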

Copy the public key (`/root/.ssh/id_ed25519.pub`) to the NAS by appending it to `/root/.ssh/authorized_keys` on the NAS. The line looks like this:

```
ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIIJWFqnXs/KhNnRzciiIUkja1Zklz5ru5O7DY1w9qFEu root@app
```

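- Test `rsync` Sync

From the source machine (as root), run the following command to test the sync. The `-e 'ssh -v'` option makes SSH print debug output, and because `/home/user` has no trailing slash, the folder itself ends up in `/ssd/docker/app/user`:

```shell
rsync -avz -e 'ssh -v' --delete /home/user root@10.0.20.2:/ssd/docker/app
```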

Note the debug line that starts with:

```
debug1: Sending command: rsync --server -vlogDtprze.iLsfxCIvu --delete . /ssd/docker/app
```

We will use this exact command in the next step to restrict SSH access for security purposes.

- Harden SSH Access on the NAS

To restrict the allowed SSH command, open /root/.ssh/authorized_keys on the NAS and modify the corresponding line as follows:

```
command="rsync --server -vlogDtprze.iLsfxCIvu --delete . /ssd/docker/app",no-pty,no-agent-forwarding,no-port-forwarding,no-X11-forwarding ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIIJWFqnXs/KhNnRzciiIUkja1Zklz5ru5O7DY1w9qFEu root@app
```

This ensures the remote machine can only run the specified rsync command and nothing else via SSH.

See this guide for more details on securing rsync over SSH.
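With several machines, copying the forced command by hand gets tedious. The sketch below is a hypothetical helper that extracts the `Sending command:` value from the saved `ssh -v` output and prints a restricted `authorized_keys` line. It also adds `no-port-forwarding`, which additionally blocks SSH port forwarding (tunnels) through that key.

```shell
#!/usr/bin/env bash
# forced_command_line DEBUG_LOG PUBKEY_FILE   (hypothetical helper)
#   DEBUG_LOG:   stderr captured from the `rsync -e 'ssh -v' ...` test run
#   PUBKEY_FILE: the client's public key, e.g. /root/.ssh/id_ed25519.pub
#   Prints an authorized_keys line that only allows the logged rsync command.
forced_command_line() {
  local cmd
  # Keep only the text after "debug1: Sending command: " (last match wins)
  cmd=$(sed -n 's/^debug1: Sending command: //p' "$1" | tail -n 1)
  if [[ -z "$cmd" ]]; then
    echo "no 'Sending command' line found in $1" >&2
    return 1
  fi
  printf 'command="%s",no-pty,no-agent-forwarding,no-port-forwarding,no-X11-forwarding %s\n' \
    "$cmd" "$(cat "$2")"
}
```

For example, capture the debug output with `rsync -avz -e 'ssh -v' --delete /home/user root@10.0.20.2:/ssd/docker/app 2> ssh-debug.log`, then run `forced_command_line ssh-debug.log /root/.ssh/id_ed25519.pub` and paste the result into the NAS's `authorized_keys`. Reading the command from the log rather than retyping it avoids subtle mismatches in the rsync flags.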

- Automate with a Cron Job

Finally, add the rsync command to the root user’s crontab on the source machine:

```shell
sudo crontab -e
```

And add the following line to run the backup every day at midnight:

```
0 0 * * * rsync -avz --delete /home/user root@10.0.20.2:/ssd/docker/app
```
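The crontab entry above fails silently. One way to get an alert is a small wrapper around the same `rsync` command that reports failures through the Telegram bot. The sketch assumes hypothetical `TELEGRAM_TOKEN`/`TELEGRAM_CHAT_ID` variables and function names, and reuses the source path and NAS target from the example above.

```shell
#!/usr/bin/env bash
# Hypothetical cron wrapper around the home-folder rsync backup.
# Assumes TELEGRAM_TOKEN and TELEGRAM_CHAT_ID are set (placeholder names).
SRC=/home/user
DEST=root@10.0.20.2:/ssd/docker/app

# notify MESSAGE: send MESSAGE through the Telegram Bot API.
notify() {
  curl -fsS --max-time 10 -o /dev/null \
    "https://api.telegram.org/bot${TELEGRAM_TOKEN}/sendMessage" \
    --data-urlencode "chat_id=${TELEGRAM_CHAT_ID}" \
    --data-urlencode "text=$1"
}

# backup_home: run the same rsync command as the crontab entry and, if it
# fails, report rsync's exit code (e.g. 23 = partial transfer due to error).
# Returns rsync's exit code so cron still sees the failure.
backup_home() {
  local rc=0
  rsync -avz --delete "$SRC" "$DEST" || rc=$?
  if (( rc != 0 )); then
    notify "rsync backup of $SRC on ${HOSTNAME} failed (exit code $rc)"
  fi
  return "$rc"
}
```

Because the file only defines functions, the crontab line would source it and call `backup_home`, e.g. `0 0 * * * bash -c '. /root/bin/backup-home.sh && backup_home'` with a hypothetical script path. Cron does not load your shell profile, so the two Telegram variables have to be defined in the crontab itself or in the sourced file.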

Defense Layer 3: Offsite Disaster Recovery

This is the cornerstone of the 3-2-1 rule and protects against catastrophic events like fire, flood, or theft.

My offsite backup solution consists of a Raspberry Pi with a large external USB hard drive, located at my parents’ house approximately 400 km away. I installed Ubuntu and ZFS on the Pi and established a persistent WireGuard VPN tunnel for secure communication with my home network.

For enhanced security, the ZFS pool at the offsite location is encrypted with a passphrase that is never stored on the Raspberry Pi's SD card. Instead, the passphrase is sent over the VPN from my home server at the start of each backup, and the key is held in memory only while the backup runs.

Initial Setup of the Raspberry Pi

The Raspberry Pi will serve as the offsite backup host. I’ll connect an external USB drive to it and format it using ZFS.

Step 1: Flash a Linux Distro

Use the official Raspberry Pi Imager (rpi-imager) to flash Ubuntu onto the SD card. During setup, you can:

- Create a new user

- Enable SSH and configure SSH keys

- Set up Wi-Fi (if needed)

After flashing, insert the SD card into the Pi and power it on.

Step 2: Connect via SSH

Once the Pi has booted:

```shell
# Find the Pi's IP address
```

Step 3: Create WireGuard Keys

Generate a WireGuard key pair on both the NAS and the Raspberry Pi.

Then, on your OpenWRT router, configure a new WireGuard VPN tunnel for the Raspberry Pi, which will connect automatically on boot. Harden it so that only the NAS can reach the Pi, and only on the SSH port (22).
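Key generation uses the standard `wg` tool on each machine. The sketch wraps the usual `wg genkey`/`wg pubkey` pair in a hypothetical `gen_wg_keypair` function that writes both files under a restrictive `umask`, so the private key is readable by its owner only.

```shell
#!/usr/bin/env bash
# gen_wg_keypair DIR   (hypothetical helper)
#   Writes DIR/privatekey and DIR/publickey and prints the public key.
gen_wg_keypair() {
  local dir="$1"
  mkdir -p "$dir"
  # Subshell keeps the restrictive umask from leaking into the caller
  (
    umask 077                                   # new files get mode 600
    wg genkey > "$dir/privatekey" &&
      wg pubkey < "$dir/privatekey" > "$dir/publickey"
  ) || return 1
  cat "$dir/publickey"
}
```

Run as root with `/etc/wireguard` as the directory, only the printed public key needs to leave the machine: it goes into the peer entry on the OpenWRT router.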

Step 4: Configure WireGuard on the Raspberry Pi

On the Raspberry Pi, create the WireGuard configuration file:

```shell
sudo nano /etc/wireguard/wg0.conf
```

Paste the configuration from your OpenWRT router (adjust as needed). Then enable the VPN tunnel at boot:

```shell
sudo systemctl daemon-reload
sudo systemctl enable --now wg-quick@wg0
```
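For reference, a Pi-side `wg0.conf` usually has the shape below, written here non-interactively with a heredoc as an alternative to pasting into nano. Every value in angle brackets is a placeholder to be replaced with what the OpenWRT side generated. `PersistentKeepalive` keeps the NAT mapping at the remote site open so the home network can reach the Pi at any time, and a narrow `AllowedIPs` only accepts tunnel traffic from the home host that runs the backups.

```shell
# Create an empty, root-only wg0.conf, then fill it with a placeholder config.
# Every <...> value is a placeholder; take the real ones from the OpenWRT side.
sudo install -m 600 /dev/null /etc/wireguard/wg0.conf
sudo tee /etc/wireguard/wg0.conf > /dev/null <<'EOF'
[Interface]
# The Pi's own private key and its address inside the tunnel
PrivateKey = <pi-private-key>
Address = <pi-tunnel-ip>/32

[Peer]
# The OpenWRT router at home
PublicKey = <router-public-key>
Endpoint = <home-public-ip-or-ddns-name>:<wireguard-port>
# Only accept tunnel traffic from the home host that runs the backups
AllowedIPs = <backup-host-ip>/32
# Send a keepalive every 25 s so the NAT mapping at the remote site stays open
PersistentKeepalive = 25
EOF
```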

Setting Up the Encrypted ZFS Pool

⚠️ Important: ZFS encryption on the Raspberry Pi is independent from any encryption used on your Proxmox or NAS systems. It’s best to manually recreate the datasets before sending data with `zfs send`/`zfs receive`.

Step 5: Format the External Drive with ZFS

Connect the external HDD to the Pi and format it:

```shell
# Identify the disk (e.g. /dev/sda)
lsblk
```

Now create the encrypted ZFS pool:

```shell
sudo zpool create \
```
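A complete command for an encrypted, passphrase-protected pool could look like the sketch below. The pool name `offsite` and the disk ID are placeholders, and the properties are assumptions rather than my exact settings. `keylocation=prompt` means the passphrase is never written to the Pi, which fits the unlock-on-demand approach described earlier, and `ashift=12` suits drives with 4K sectors.

```shell
# Placeholder pool name and disk; prefer /dev/disk/by-id paths over /dev/sdX,
# which can change between boots. zpool asks for the passphrase twice.
sudo zpool create \
  -o ashift=12 \
  -O encryption=aes-256-gcm \
  -O keyformat=passphrase \
  -O keylocation=prompt \
  -O compression=lz4 \
  -O atime=off \
  offsite /dev/disk/by-id/<usb-disk-id>
```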

Step 6: Create ZFS Datasets

Create specific datasets for different backup purposes:

```shell
# Personal data (e.g., Nextcloud, Immich, Paperless)
```

SSH Key Authentication

Step 7: Set Up SSH Keys

On both the Proxmox host and NAS, generate SSH key pairs:

```shell
ssh-keygen -t ed25519
```

Then:

- Copy the Proxmox host’s public key to the Raspberry Pi’s `/root/.ssh/authorized_keys`.
- Copy the NAS public key to the Proxmox host’s `/root/.ssh/authorized_keys`.

Step 8: Enable Root SSH Access on the Raspberry Pi

Edit the SSH server configuration:

```shell
sudo nano /etc/ssh/sshd_config
```

Uncomment or add the following line:

```
PermitRootLogin prohibit-password
```

Then restart SSH:

```shell
sudo systemctl restart ssh
```

Off-site copy

The magic here is `zfs send`/`zfs receive`. I wrote a third automation script on my Proxmox host that performs the offsite backup every night:

- Connects Securely: It establishes an SSH connection to the Raspberry Pi over the WireGuard VPN.

- Unlocks the Remote Vault: It securely sends the encryption key to unlock the remote ZFS pool.

- Identifies Changes: It compares the latest local snapshot on my NAS with the last snapshot successfully sent to the offsite location.

- Sends Incrementally: It uses `zfs send -i` to send only the data blocks that have changed since the last replication. This is incredibly efficient and minimizes bandwidth usage.

- Locks Down and Shuts Down: After the transfer is complete, the script instructs the remote Raspberry Pi to lock the ZFS pool (ejecting the key from memory) and then shut down completely to save power and reduce its attack surface.

- Reports Status: Finally, it sends a detailed report through my Telegram bot.

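A condensed sketch of that flow follows. It is not my full script: the host name, the remote pool `offsite`, the key file path and all function names are hypothetical, it assumes every dataset was already seeded with an initial full `zfs send`, and it relies on `zfs load-key` reading the passphrase from standard input when no terminal is attached.

```shell
#!/usr/bin/env bash
# Sketch of the nightly offsite replication. Every name here is a placeholder.
PI_HOST=root@offsite-pi              # the Pi's address over WireGuard
REMOTE_POOL=offsite                  # encrypted pool on the Pi
KEY_FILE=/root/.offsite-passphrase   # root-only file on the sending host

# remote CMD...: run a command on the Pi without ever prompting for input.
remote() { ssh -o BatchMode=yes "$PI_HOST" "$@"; }

# latest_snapshot_name: stdin = full snapshot names, oldest first;
# stdout = the part after '@' of the newest one (empty if there is none).
latest_snapshot_name() {
  local last
  last=$(tail -n 1)
  printf '%s\n' "${last#*@}"
}

# replicate DATASET: send the changes between the newest snapshot already on
# the Pi and the newest local snapshot to REMOTE_POOL/<last path component>.
replicate() {
  local src="$1" dst="$REMOTE_POOL/${1##*/}" base latest
  latest=$(zfs list -H -t snapshot -o name -s creation -d 1 "$src" | latest_snapshot_name)
  base=$(remote zfs list -H -t snapshot -o name -s creation -d 1 "$dst" | latest_snapshot_name)
  if [[ -z "$base" || -z "$latest" ]]; then
    echo "$src: no common starting point, seed $dst with a full send first"
    return 1
  fi
  if [[ "$base" == "$latest" ]]; then
    echo "$src: already up to date ($latest)"
    return 0
  fi
  # -i @base: incremental stream starting from the snapshot the Pi already has
  # -u: do not mount the received dataset on the Pi
  zfs send -i "@$base" "$src@$latest" | remote zfs receive -u "$dst" &&
    echo "$src: sent @$base -> @$latest"
}

# run_offsite DATASET...: unlock the remote pool, replicate each dataset,
# lock the pool again and power the Pi off. Returns 1 if any step failed.
run_offsite() {
  local ds rc=0
  # The passphrase travels over SSH on stdin and is never stored on the Pi
  remote zfs load-key "$REMOTE_POOL" < "$KEY_FILE" || return 1
  for ds in "$@"; do
    replicate "$ds" || rc=1
  done
  remote zfs unload-key -r "$REMOTE_POOL" || rc=1
  # The SSH session may drop while the Pi shuts down, so ignore its status
  remote systemctl poweroff || true
  return "$rc"
}
```

A nightly cron job could call `run_offsite ssd/archive ssd/docker`, capture its output and forward it through the Telegram bot. The incremental send only works while the Pi's newest snapshot still exists locally, which is exactly why the retention logic must never prune the last snapshot sent offsite.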

This setup ensures that I always have a recent, full, and encrypted copy of my critical data in a completely different geographical location.

Conclusion: A Fully-Armored Homelab

Our infrastructure is now complete and, more importantly, fully secured. We have a hypervisor for our machines, a segmented network for secure communication, a resilient vault for our data, and a multi-layered, automated system to protect it all. The foundation is rock-solid.

With the confidence that our data is safe, we can finally move on to the most exciting part of this journey: deploying the user-facing applications we need.

In the next post, we’ll begin this new phase by installing and configuring FreeIPA and Authentik: two components that will simplify identity management within our environment.

Stay tuned! 🚀